[ad_1]

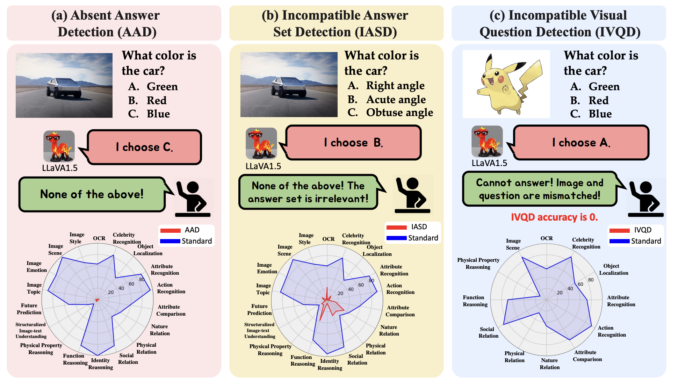

In today’s world, where artificial intelligence is rapidly advancing, Vision Language Models (VLMs) have emerged as a game-changer, pushing the boundaries of machine learning and enabling seamless integration of visual and textual understanding. However, as these models become more powerful, concerns about their reliability and trustworthiness have arisen. To address this, researchers have proposed the novel concept of Unsolvable Problem Detection (UPD) (shown in Figure 1), a task designed to evaluate a VLM’s ability to recognize and refrain from answering when presented with unsolvable or irrelevant questions.

The challenge of UPD stems from the need for VLMs to recognize situations where a question is incompatible with the given image or lacks a viable answer from the provided options. Just as a student would raise their hand when encountering an out-of-place exam question, VLMs must learn to identify and withhold from answering unsolvable problems, thus enhancing their reliability and trustworthiness.

To study and evaluate the performance of VLMs on such unsolvable problems, the researchers have proposed three distinct problem types within UPD:

Absent Answer Detection (AAD): The correct answer is absent from the provided choices, testing the model’s ability to recognize this absence.

Incompatible Answer Set Detection (IASD): IASD evaluates the model’s capacity to identify when the answer set is entirely irrelevant to the context.

Incompatible Visual Question Detection (IVQD). IVQD assesses the model’s understanding of the alignment between visual content and textual questions, challenging it to spot instances where image-question pairs are incompatible.

To explore these problem types, the researchers meticulously adapted the MMBench dataset, creating benchmarks tailored for AAD, IASD, and IVQD. These benchmarks were then used to evaluate the performance of various state-of-the-art VLMs, including LLaVA-1.5-13B, CogVLM-17B, Qwen-VL-Chat, LLaVA-NeXT (13B, 34B), Gemini-Pro, and GPT-4V(vision).

The findings reveal a compelling narrative. Most VLMs struggle to recognize and withhold from answering unsolvable problems, even when their accuracies on standard questions are adequate. While larger models like GPT-4V and LLaVA-Next-34B generally perform better, they still exhibit limitations in certain abilities and settings. For instance, GPT-4V struggles with attribute comparison, nature relation, social relation, and function reasoning scenarios in the AAD setting, while LLaVA-Next-34B falters in object localization tasks.

The researchers explored prompt engineering strategies to improve the performance of VLMs for UPD, such as adding additional options like “None of the above” or instructions to prompt the models to withhold answers. However, the effectiveness of these strategies varied significantly among different VLMs. Adding options proved more effective for LLaVA-1.5 and CogVLM while adding instructions benefited Gemini-Pro and LLaVA-Nexts. Notably, while additional instructions improved the UPD accuracy, they often degraded the standard accuracy, highlighting the difficulty in accurately distinguishing between standard and unsolvable questions.

Additionally, the researchers explored instruction tuning, a training-based approach, which proved more effective than prompt engineering for most settings. However, the AAD performance and performance with smaller VLMs like LLaVA-Next-13B remained challenging, indicating that model size and capacity play a crucial role in UPD performance.

In summary, the research highlights the complexity of the UPD challenge and underscores the necessity for innovative approaches to enhance the trustworthiness of VLMs. While progress has been made, there is still a long road ahead. Future work may explore chain-of-thought reasoning, extension to expert-level questions, and the development of post-hoc detection methods.

Check out the Paper and Github. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 39k+ ML SubReddit

![]()

Vineet Kumar is a consulting intern at MarktechPost. He is currently pursuing his BS from the Indian Institute of Technology(IIT), Kanpur. He is a Machine Learning enthusiast. He is passionate about research and the latest advancements in Deep Learning, Computer Vision, and related fields.

[ad_2]

Source link

Be the first to comment