Imagine having a digital assistant that can not only answer your questions but also navigate the web, solve complex math problems, write code, and even reason about images and text-based games. Sound too good to be true? Well, brace yourselves because the future of artificial intelligence just got a whole lot more accessible and transparent with the introduction of LUMOS.

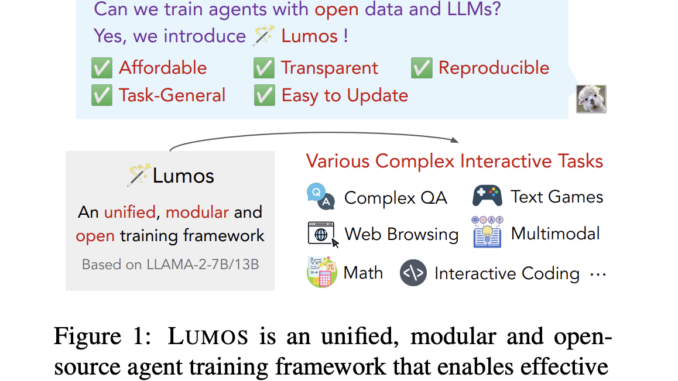

In a groundbreaking development, researchers from the Allen Institute for AI, UCLA, and the University of Washington have unveiled LUMOS, an open-source framework that promises to revolutionize the way we interact with language agents. Unlike existing closed-source solutions that often feel like black boxes, LUMOS offers an unprecedented level of affordability, transparency, and reproducibility, making it a game-changer in the world of AI.

But what exactly is LUMOS, and why is it causing such a stir in the AI community? Buckle up, because we’re about to dive into the nitty-gritty details of this remarkable innovation, exploring how it works, what it can do, and why it matters more than you might think.

Current language agents often rely on large, closed-source language models like GPT-4 or ChatGPT as the core component. While powerful, these models are expensive, lack transparency, and offer limited reproducibility and controllability.

The LUMOS framework takes a different approach by utilizing open-source large language models (LLMs) as the base models. It employs a unified and modular architecture consisting of three key components: a planning module, a grounding module, and an execution module.

The planning module decomposes complex tasks into a sequence of high-level subgoals expressed in natural language. For example, for a multimodal question like “The device in her hand is from which country?”, the planning module might generate two subgoals: “Identify the brand of the device” and “Answer the country of the device brand.”

The grounding module then translates these high-level subgoals into low-level actions that the tools in the execution module can run. For instance, the first subgoal might be grounded into an action like “VQA(<img>, What is the brand..?)”, which uses a visual question-answering tool to identify the device brand from the image.
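To make the idea of a grounded action concrete, here is a minimal Python sketch that parses an action string of the shape quoted above into a tool name and its arguments. The `Action` type, the `parse_action` helper, and the regular expression are illustrative assumptions, not part of the LUMOS codebase, and the sketch assumes at most two arguments per call.

```python
import re
from typing import List, NamedTuple


class Action(NamedTuple):
    """Hypothetical container for one grounded action."""
    tool: str
    args: List[str]


# Matches strings shaped like ToolName(arg1, arg2).
ACTION_PATTERN = re.compile(r"^\s*(\w+)\((.*)\)\s*$")


def parse_action(text: str) -> Action:
    """Split an action string into the tool name and its arguments."""
    match = ACTION_PATTERN.match(text)
    if match is None:
        raise ValueError(f"not a tool call: {text!r}")
    tool, raw_args = match.groups()
    # Assumption: split only at the first comma, so a question that
    # itself contains commas stays in one piece.
    args = [part.strip() for part in raw_args.split(",", 1)] if raw_args.strip() else []
    return Action(tool, args)


action = parse_action("VQA(<img>, What is the brand..?)")
print(action.tool, action.args)
```

A parsed action like this is what an execution layer would need in order to look up the right tool and pass it the image placeholder and the question.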

The execution module contains a collection of off-the-shelf tools, including APIs, neural models, and virtual simulators, that can execute the grounded actions. The results of these executed actions are then fed back into the planning and grounding modules, enabling an iterative and adaptive agent behavior.
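The plan, ground, execute cycle described here can be sketched as a short loop. This is a toy illustration under stated assumptions: the `plan` and `ground` functions are hard-coded stand-ins for what would be fine-tuned language models, the `TOOLS` table returns made-up placeholder answers ("ExampleBrand", "Exampleland"), and none of these names come from the LUMOS release.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Each history entry is (subgoal, action, result).
History = List[Tuple[str, str, str]]


def plan(task: str, history: History) -> Optional[str]:
    """Stub planner: return the next subgoal, or None when the task is done."""
    subgoals = [
        "Identify the brand of the device",
        "Answer the country of the device brand",
    ]
    return subgoals[len(history)] if len(history) < len(subgoals) else None


def ground(subgoal: str, history: History) -> Tuple[str, str]:
    """Stub grounder: map a subgoal to (tool, argument), reusing earlier results."""
    if subgoal.startswith("Identify"):
        return "VQA", "What is the brand of the device?"
    brand = history[-1][2]  # result of the previous step is fed back in
    return "QA", f"Which country is {brand} from?"


# Hypothetical tools that return placeholder answers.
TOOLS: Dict[str, Callable[[str], str]] = {
    "VQA": lambda question: "ExampleBrand",
    "QA": lambda question: "Exampleland",
}


def run_agent(task: str, max_steps: int = 5) -> History:
    """Alternate planning, grounding, and execution until the planner stops."""
    history: History = []
    for _ in range(max_steps):
        subgoal = plan(task, history)
        if subgoal is None:
            break
        tool, argument = ground(subgoal, history)
        result = TOOLS[tool](argument)
        history.append((subgoal, f"{tool}({argument})", result))
    return history


history = run_agent("The device in her hand is from which country?")
for subgoal, action, result in history:
    print(subgoal, "|", action, "|", result)
```

The point of the sketch is the feedback path: the second action is built from the result of the first, which is what makes the behavior iterative rather than a fixed script.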

One of the key advantages of LUMOS is its modular design, which allows for easy upgrades and wider applicability to diverse interactive tasks. By separating the planning, grounding, and execution components, researchers can improve or replace individual modules without affecting the others.
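One way to picture this modularity is an agent object that holds its three components as interchangeable parts. The sketch below is an assumption-laden illustration, not the LUMOS implementation: the `Agent` class and all four helper functions are hypothetical, and each module is reduced to a plain Python callable.

```python
from dataclasses import dataclass, replace
from typing import Callable, List


@dataclass(frozen=True)
class Agent:
    """Hypothetical agent made of three independent, swappable callables."""
    planner: Callable[[str], List[str]]
    grounder: Callable[[str], str]
    executor: Callable[[str], str]

    def run(self, task: str) -> List[str]:
        # Plan subgoals, ground each into an action, then execute it.
        return [self.executor(self.grounder(subgoal)) for subgoal in self.planner(task)]


def simple_planner(task: str) -> List[str]:
    return [f"Solve: {task}"]


def simple_grounder(subgoal: str) -> str:
    return f"Calculator({subgoal})"


def echo_executor(action: str) -> str:
    return f"ran {action}"


def logging_executor(action: str) -> str:
    return f"[log] ran {action}"


agent = Agent(simple_planner, simple_grounder, echo_executor)
# Swap only the execution component; planner and grounder are reused as-is.
upgraded = replace(agent, executor=logging_executor)

print(agent.run("2+2"))
print(upgraded.run("2+2"))
```

Because each component only depends on the input and output types of its neighbors, replacing the executor leaves the planner and grounder untouched.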

To train LUMOS, the researchers curated a large-scale, high-quality dataset of over 56,000 annotations derived from diverse ground-truth reasoning rationales across various complex interactive tasks, including question answering, mathematics, coding, web browsing, and multimodal reasoning. These annotations were obtained by employing GPT-4 and other advanced language models to convert existing benchmarks into a unified format compatible with the LUMOS architecture. The resulting dataset is one of the largest open-source resources for agent fine-tuning, enabling smaller language models to be trained as language agents effectively.
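As a rough picture of what converting a benchmark rationale into a unified format could involve, the sketch below turns a list of solution steps into paired planning and grounding annotations. Note the substitution: the researchers used GPT-4 and other language models for this conversion, whereas this example uses a simple rule-based function purely for illustration. The field names (`task`, `planning`, `grounding`), the `Calculator` action, and the `R1`-style result labels are all hypothetical and should not be read as the actual LUMOS annotation schema.

```python
from typing import Dict, List, Tuple


def to_unified_annotation(
    question: str, steps: List[Tuple[str, str]]
) -> Dict[str, object]:
    """Convert (description, expression) rationale steps into one record.

    Hypothetical format: one natural-language subgoal and one tool-call
    action per rationale step.
    """
    subgoals = [
        f"Subgoal {i}: {description}"
        for i, (description, _) in enumerate(steps, start=1)
    ]
    actions = [
        f"R{i} = Calculator({expression})"
        for i, (_, expression) in enumerate(steps, start=1)
    ]
    return {"task": question, "planning": subgoals, "grounding": actions}


record = to_unified_annotation(
    "A box holds 3 rows of 4 apples. How many apples are in 2 boxes?",
    [
        ("Compute the apples in one box", "3 * 4"),
        ("Compute the apples in two boxes", "R1 * 2"),
    ],
)
print(record["planning"])
print(record["grounding"])
```

Keeping subgoals and actions in separate fields mirrors the split between planning and grounding, so each could serve as training data for its own module.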

In evaluations across nine datasets, LUMOS exhibited several key advantages. It outperformed multiple larger open-source agents on held-out datasets for each task type, in some cases even surpassing GPT-based agents on question-answering and web tasks. LUMOS also outperformed agents produced by other training methods, such as chain-of-thought training and unmodularized integrated training. Notably, LUMOS demonstrated strong generalization, significantly outperforming 30B-scale agents (WizardLM-30B and Vicuna-v1.3-33B) and domain-specific agents on unseen tasks involving new environments and actions.

With its open-source nature, competitive performance, and strong generalization abilities, LUMOS represents a significant step forward in developing affordable, transparent, and reproducible language agents for complex interactive tasks.

All credit for this research goes to the researchers of this project.


Vibhanshu Patidar is a consulting intern at MarktechPost. He is currently pursuing a B.S. at the Indian Institute of Technology (IIT) Kanpur. He is a Robotics and Machine Learning enthusiast with a knack for unraveling the complexities of algorithms that bridge theory and practical applications.
